Prototype 1 Development and Quarters

Week 3 Updates

- first prototype updates

- quarters

- iterations and learning takeaways

Prototype Updates

It’s hard to believe that we are already three weeks into the semester. This week, we finished our first prototype and had our Quarters meeting with faculty on Wednesday, 9/14. We received a lot of valuable feedback that we will share. But first, let’s talk about the prototype.

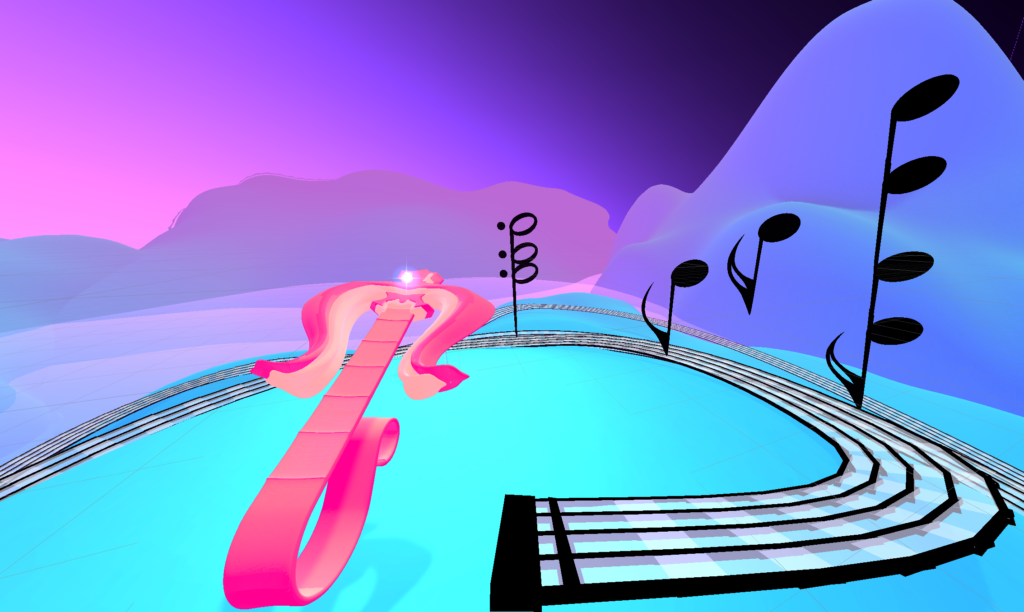

The video above demonstrates the gameplay of our first prototype. As you may recall from our week 2 blog post, the experience goal is that guests learn about chord structures by resolving dissonant chords via a pullback mechanism that allows them to select scale degrees within the key [this experience is in the key of C Major].

Due to time constraints, we were unable to implement a wave manager and enemy movement, which would have sent the various chord structures [triad (root position), first inversion, and second inversion] rushing towards the player in a tower-defense-like manner. What the prototype does include:

- A pullback mechanism with which the guest selects scale degrees by pulling their hand forward or backward.

- Accompanied by visual feedback: a UI overlay that signals which scale degree the guest is on.

- Accompanied by sonic feedback: a single-note one-shot that pitch-shifts as the guest moves their hand forward and backward.

- Three different enemy types.

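To make the pullback mechanism more concrete, here is a minimal Python sketch of one way the hand-to-pitch mapping could work. It assumes the pull distance is split into seven equal bins (one per scale degree of C major) and that the one-shot is pitch-shifted by changing its playback rate. `PULL_RANGE_M`, `degree_from_pull`, and `pitch_ratio` are hypothetical names, and the 0.35 m range is an assumed value, not the prototype's actual tuning.

```python
# Hypothetical sketch of the pullback mechanism: hand displacement along the
# controller's forward axis is quantized into one of the seven scale degrees
# of C major, and a one-shot recorded at C is pitch-shifted to match.

# Semitone offsets of C major scale degrees 1..7 above the tonic C.
C_MAJOR_SEMITONES = [0, 2, 4, 5, 7, 9, 11]
NOTE_NAMES = ["C", "D", "E", "F", "G", "A", "B"]

# Assumed total pull distance (metres) that spans all seven degrees.
PULL_RANGE_M = 0.35


def degree_from_pull(pull_m: float) -> int:
    """Map a pull distance (0 = hand at rest) to a scale degree 1..7."""
    # Clamp so pushing past rest or over-pulling stays on the end degrees.
    t = min(max(pull_m / PULL_RANGE_M, 0.0), 1.0)
    # Seven equal bins; a full pull (t == 1.0) still lands on degree 7.
    return min(int(t * 7), 6) + 1


def pitch_ratio(degree: int) -> float:
    """Playback-rate multiplier that shifts the C one-shot to this degree."""
    semitones = C_MAJOR_SEMITONES[degree - 1]
    # Equal temperament: each semitone multiplies frequency by 2^(1/12).
    return 2.0 ** (semitones / 12.0)


if __name__ == "__main__":
    for pull in (0.0, 0.1, 0.2, 0.35):
        d = degree_from_pull(pull)
        print(f"pull={pull:.2f} m -> degree {d} ({NOTE_NAMES[d - 1]}), "
              f"rate x{pitch_ratio(d):.3f}")
```

Equal-width bins give every degree the same physical distance, so playing up the scale feels evenly spaced; a real build might also add a little hysteresis so the note does not flicker at bin boundaries.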

Enemy chord structure logic:

- Each enemy is missing one of its notes, or has a dissonant note, and vocalizes its discordant chord.

- An enemy resolves when the guest fires the missing scale degree, and it then vocalizes its resolved chord.
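The enemy logic above can be sketched in Python as follows. This is a hypothetical model, not the prototype's code: chords are built from C major scale steps (root, third, fifth), an inversion moves the bottom note up an octave, and the "broken" note is detuned a quarter tone sharp as an assumed way to make it sound discordant (a missing-note enemy would simply omit it). `Enemy`, `chord_steps`, `step_to_midi`, and `DISSONANT_DETUNE` are illustrative names.

```python
from dataclasses import dataclass

# MIDI note numbers of C major scale degrees 1..7, from middle C (C4 = 60).
C_MAJOR_MIDI = [60, 62, 64, 65, 67, 69, 71]

# Assumed detune for the broken note: a quarter tone (0.5 semitone) sharp.
DISSONANT_DETUNE = 0.5


def step_to_midi(step: int) -> int:
    """Convert a 0-based scale step (may exceed 6) to a MIDI note number."""
    return C_MAJOR_MIDI[step % 7] + 12 * (step // 7)


def chord_steps(root_degree: int, inversion: int) -> list[int]:
    """Scale steps of a diatonic triad, bottom to top.

    inversion 0 = root position, 1 = first inversion, 2 = second inversion.
    """
    steps = [root_degree - 1, root_degree + 1, root_degree + 3]  # root, 3rd, 5th
    for _ in range(inversion):
        # Invert by moving the bottom note up one octave (7 scale steps).
        steps = steps[1:] + [steps[0] + 7]
    return steps


@dataclass
class Enemy:
    root_degree: int   # 1..7: which diatonic triad this monster is
    inversion: int     # 0, 1 or 2
    broken_index: int  # which chord tone (bottom = 0) is dissonant
    resolved: bool = False

    @property
    def target_degree(self) -> int:
        """The scale degree the guest must fire to resolve this enemy."""
        step = chord_steps(self.root_degree, self.inversion)[self.broken_index]
        return step % 7 + 1

    def vocalize(self) -> list[float]:
        """MIDI pitches the monster sings; the broken note is detuned until resolved."""
        pitches = []
        for i, step in enumerate(chord_steps(self.root_degree, self.inversion)):
            broken = i == self.broken_index and not self.resolved
            pitches.append(step_to_midi(step) + (DISSONANT_DETUNE if broken else 0.0))
        return pitches

    def hit(self, fired_degree: int) -> bool:
        """Resolve the enemy if the fired scale degree is the one it needs."""
        correct = fired_degree == self.target_degree
        if correct:
            self.resolved = True
        return correct
```

For the case discussed later in this post, `Enemy(1, 0, 0)` models a root-position triad [1, 3, 5] whose 1 is dissonant: `hit(3)` leaves it unresolved, while `hit(1)` resolves it and `vocalize()` then returns the in-tune C major chord.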

Generally, we found that our first prototype was successful at giving guests an understanding of the relationship between pitches: the pullback mechanism provided real-time feedback on what each scale degree sounded like. This mechanism was excellent for the VR space. It felt both gratifying to essentially play a scale by pulling back and forth and satisfying to shoot musical notes.

However, a drawback of our prototype was that it was confusing to actually learn about the three different types of chord structures. We believe that this was in part due to A) a lack of proper onboarding, and B) a confusing and complex representation of chords. Our enemies were a mix of the literal and the abstract. They were literal because they were actual notes with proper spacings, arranged to represent each unique chord structure. They were abstract because they floated in space without a proper musical staff background, and numbers and colors were used to indicate scale degrees. Because we are targeting university students with little theoretical knowledge, we should have made the enemy representations more abstract. Additionally, because there was no onboarding process, faculty didn't know what the game rules were or what the numbers on the chords meant, an issue relating to both the onboarding process and the representation of musical elements.

Quarters Feedback

- Pertaining to High-Level Design

    - The high-level purpose needs more clarity: too many purposes are floating together, and we need to pick one direction.

    - Are guests emerging as creators or listeners?

    - Focus on one transformation.

    - What is your definition of music theory?

    - How is success measured?

    - What is the onboarding process for each experience?

    - Why not use a keyboard as an interface?

    - Concern that the genre pillar in the design madlib may hinder experimentation.

- Prototype Feedback

    - It is difficult to understand what the guest must do.

    - The representation of music is confusing: it is kind of abstract but kind of literal.

    - What are guests learning?

Moving Forward

Following the faculty feedback, we spent the next few days reflecting and discussing. First, we unified our series of lingering and nebulous high-level purposes into a single question: Does Lyraflo foster musical curiosity? This essential question will serve as a frame for future decisions: if an idea, concept, mechanic, etc. answers yes to the question, it will be added to the experience; if it answers no, it will not.

Next, we turned to our transformation. During our meeting with one of the faculty, Dave Culyba, we were asked what we thought guests could learn and feel in a 2-3 minute gameplay session. This focus on time and effect struck an immediate chord with us. Because we are building rapid prototypes, it is important not only that we scope small, but also that we design a transformation that can feasibly be reached in a short gameplay session. We hope that our short experiences can foster guests' curiosity, so that they emerge from the game with an interest in engaging further with theory. This engagement can manifest in several ways: guests could become more active listeners, begin talking with their friends about music in more theoretically driven terms, or start composing music. The point is that our goal is to quickly show the fun in a theoretical concept, so that guests emerge with a budding curiosity to discover more about the world of music theory and why songs sound and feel the way that they do.

Future Prototypes

- Adding an onboarding process in the form of a short video or presentation that quickly gives guests a contextual understanding of what they will be doing.

- The importance of abstraction – avoid using literal sheet music elements when representing principles.

- Scoping down our prototypes – 2 to 3 minute experiences.

- Designing for the VR space.

Possible Iterations to Prototype 1 and Valuable Learning Takeaways

This prototype has a lot of potential for future iteration. During a meeting with one of our SMEs, Kristian Tchetchko made us aware of the importance of sound priority. In a tower defense-like game, many enemies spawn over time and rush towards the player, creating a distinct sense of chaos. In our experience, this chaos was sonified through the dissonant vocalizations of the chord monsters. It was difficult for guests to actually understand what each chord sounds like because they were enveloped in cacophony from all sides and angles. This problem could be solved by adding a lock-on mechanic: when the guest locks onto a chord monster, the mechanic would duck or high-pass extraneous sounds. That particular vocalization would then take priority, so that the guest can really hear the idiosyncrasies of each chord structure and identify where the dissonance lies. Additionally, we could make this mechanic freeze or slow time, allowing the guest to focus on the chord without worrying about enemies rushing in while locked on.

Kristian also stressed the importance of focusing on the theoretical concept and making sure that gameplay does not hinder the guest's ability to learn. In some tower defense games, players have to aim in order to strike targets, and the same is true in our experience. However, it is bad design if aiming takes away from achieving the learning goal. For instance, say the guest is being rushed by a triad enemy [1,3,5] and the 1 is dissonant. They pull back a 1, the correct scale degree to resolve it, and take aim at the chord monster. Unfortunately, they miss and then lose. Our game is first and foremost an experience for learning about musical elements, so players should not be penalized by gameplay mechanics when they have picked the right answer.

We could avert this problem by adding an aimbot, or auto-lock, that assists with aiming and allows guests to focus on the music first and foremost. The whole point of the game genre is to serve as a template that makes the musical lesson more engaging and fun; the gameplay should never become a distraction or the point of focus.
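The lock-on and auto-lock ideas could fit together roughly as in the Python sketch below. Everything here is an assumption for illustration: the auto-lock picks the chord monster closest to the guest's aim direction within a 30° cone, ducks every other monster's voice to a linear gain of 0.2 (about -14 dB; high-passing them, the other option mentioned above, would work similarly), and slows time while locked. A fired note would then be delivered to the locked monster instead of being simulated as a free projectile, so a correct answer cannot be lost to a missed shot. `Monster`, `auto_lock`, `mix_gains`, `time_scale`, and the tuning constants are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]

# Hypothetical tuning values, not measured from the prototype.
LOCK_CONE_DEG = 30.0     # max angle between aim and monster for auto-lock
DUCK_GAIN = 0.2          # linear gain for non-target voices (about -14 dB)
LOCKED_TIME_SCALE = 0.3  # enemies move at 30% speed while locked on


@dataclass
class Monster:
    name: str
    position: Vec3


def angle_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two vectors in degrees (180 if either has zero length)."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 180.0
    cos = sum(x * y for x, y in zip(a, b)) / (na * nb)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def auto_lock(head: Vec3, aim_dir: Vec3, monsters: list[Monster]) -> Optional[Monster]:
    """Lock onto the monster nearest the aim direction, if any is inside the cone."""
    best, best_angle = None, LOCK_CONE_DEG
    for m in monsters:
        to_monster = tuple(p - h for p, h in zip(m.position, head))
        ang = angle_deg(aim_dir, to_monster)
        if ang <= best_angle:
            best, best_angle = m, ang
    return best


def mix_gains(monsters: list[Monster], locked: Optional[Monster]) -> dict[str, float]:
    """Per-monster voice gains: duck everything except the locked target."""
    if locked is None:
        return {m.name: 1.0 for m in monsters}
    return {m.name: (1.0 if m is locked else DUCK_GAIN) for m in monsters}


def time_scale(locked: Optional[Monster]) -> float:
    """Slow the game clock while a target is locked so the guest can listen."""
    return LOCKED_TIME_SCALE if locked is not None else 1.0
```

Because locking on both cleans up the mix and removes precise aiming from the success condition, the only thing the guest has to get right is the scale degree, which keeps the gameplay in service of the musical lesson.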