Technologies -

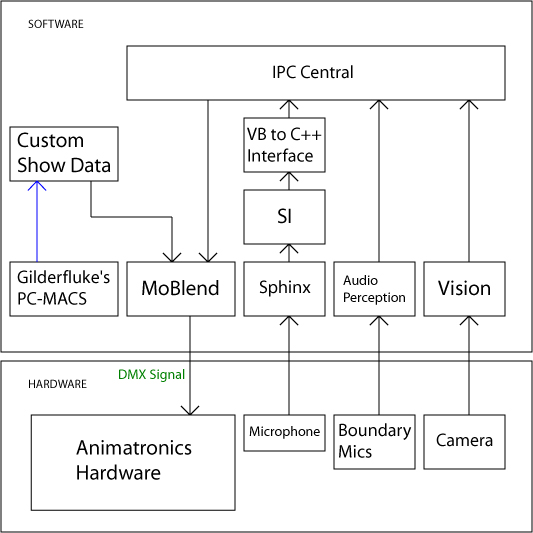

Sphinx is open-source speech recognition software available from the CMU speech group. It translates voice input into text. "Doc" Beardsley first uses Sphinx to recognize the speaker's words, then passes the results to the discussion engine, which processes them to form a response. Sphinx relies on models of how words are pronounced and of which words commonly occur together.
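As a rough illustration of the "which words commonly occur together" idea, here is a toy bigram language model. It is a sketch under our own assumptions, not Sphinx's code or API: real recognizers use n-gram models trained on far larger corpora, with smoothing, whereas this version uses unsmoothed counts from three made-up sentences.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count how often each word follows another; <s> and </s> mark sentence boundaries."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, word in zip(words, words[1:]):
            counts[prev][word] += 1
    return counts

def bigram_prob(counts, prev, word):
    """Unsmoothed maximum-likelihood estimate of P(word | prev); 0.0 if prev was never seen."""
    followers = counts.get(prev)
    if not followers:
        return 0.0
    return followers[word] / sum(followers.values())

def sentence_score(counts, sentence):
    """Multiply bigram probabilities; a higher score means a more plausible word sequence."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    score = 1.0
    for prev, word in zip(words, words[1:]):
        score *= bigram_prob(counts, prev, word)
    return score

# Hypothetical training sentences, for illustration only.
model = train_bigrams(["what is your name", "what is your invention", "tell me your name"])
print(sentence_score(model, "what is your name"))  # about 0.444 (2/3 * 2/3)
print(sentence_score(model, "what is you name"))   # 0.0, because "is you" was never seen
```

A recognizer combines scores like these with acoustic evidence, so an acoustically ambiguous phrase is pushed toward word sequences the language model considers likely.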

Once "Doc" Beardsley receives a comment from a guest (through either text or voice input), a discussion engine processes it. The engine relies on Synthetic Interview for most of the comprehension work, then builds on those results to decide how to respond. It includes ten modes of interaction, seventeen comment types, and a robust comment structure that allows flexibility in constructing a response. As a result, Doc can answer questions, discuss a topic, gather specific input (such as your name or a topic) and respond accordingly, initiate comments, express his mood, detect inappropriate words, and even burp, hiccup, sneeze, and snore (his personal favorite).

Synthetic Interview is a software technology created to interpret the meaning of text. The program attempts to match its input against a pre-constructed list of questions a user might enter, producing a best match and an associated accuracy score. "Doc" Beardsley uses this software to process comments from guests. The Interactive Animatronics Initiative would like to thank the developers of this technology, Scott Stevens, Don Marinelli, and Mike Cristel, for allowing its use in "Doc" Beardsley.
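The matching method inside Synthetic Interview is not described here, so the sketch below swaps in a simple, clearly different stand-in to show the "best match plus score" idea: Jaccard word overlap between the guest's input and each pre-constructed question. The question list and function names are hypothetical.

```python
import re

def tokenize(text):
    """Lowercase text and return its set of words, ignoring punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))

def best_match(user_input, questions):
    """Return (best question, score), where score is the Jaccard word overlap in [0, 1].
    Returns (None, 0.0) if no question shares any word with the input."""
    user_words = tokenize(user_input)
    best, best_score = None, 0.0
    for question in questions:
        q_words = tokenize(question)
        union = user_words | q_words
        score = len(user_words & q_words) / len(union) if union else 0.0
        if score > best_score:
            best, best_score = question, score
    return best, best_score

# Hypothetical pre-constructed question list.
QUESTIONS = ["What is your name?", "What did you invent?", "Where were you born?"]
print(best_match("Tell me your name", QUESTIONS))  # ('What is your name?', 0.333...)
```

A caller could compare the score against a threshold and fall back to a clarifying comment when the best match is weak; any such threshold would need tuning and is not given in the source.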

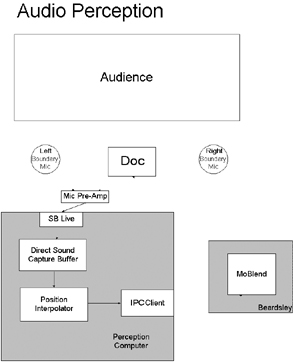

The purpose of Audio Perception is to let Doc estimate the direction of sound sources, so that he can interact in a more lifelike way. Doc uses this data to turn his head and eyes toward a speaker.
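The source does not say how Audio Perception estimates direction. One common technique, assumed here purely for illustration, is time difference of arrival (TDOA) between two microphones: cross-correlate the two signals to find the delay Δt in samples, then convert it to a bearing with θ = asin(c·Δt / d), where c is the speed of sound and d is the microphone spacing. The two-microphone layout, sample rate, and spacing below are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value for room-temperature air

def best_lag(left, right, max_lag):
    """Return the lag (in samples) maximizing sum(left[i] * right[i + lag]).
    A positive lag means the sound reached the left microphone first."""
    best, best_corr = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        corr = 0.0
        for i, sample in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                corr += sample * right[j]
        if corr > best_corr:
            best, best_corr = lag, corr
    return best

def direction_of_arrival(left, right, sample_rate, mic_spacing):
    """Estimate the source bearing in degrees: 0 is straight ahead,
    positive is toward the left microphone, negative toward the right."""
    # The physically largest possible delay bounds the lags we need to search.
    max_lag = int(mic_spacing / SPEED_OF_SOUND * sample_rate) + 1
    delay = best_lag(left, right, max_lag) / sample_rate
    # Clamp to [-1, 1] so noise cannot push asin outside its domain.
    ratio = max(-1.0, min(1.0, SPEED_OF_SOUND * delay / mic_spacing))
    return math.degrees(math.asin(ratio))

# Hypothetical example: an impulse that reaches the right microphone 5 samples late.
left = [0.0] * 100
right = [0.0] * 100
left[40] = 1.0
right[45] = 1.0
print(direction_of_arrival(left, right, sample_rate=16000, mic_spacing=0.2))
```

A positive result here means the source lies on the left-microphone side; the head and eye controller would then turn toward that bearing.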

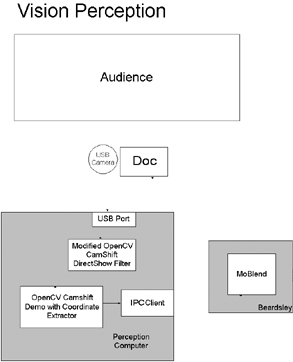

Vision Perception gives Doc the ability to interpret the basic positions and motions of people within his view. This data strengthens the illusion of life by allowing Doc to follow the people interacting with him, moving his head and eyes both horizontally and vertically.
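The source does not specify Doc's vision algorithm. A simple technique that fits the description, assumed here for illustration, is frame differencing: mark pixels whose brightness changed between two grayscale frames, take their centroid, and map that point to pan and tilt offsets. The threshold, field-of-view values, and linear pixel-to-angle mapping are hypothetical simplifications.

```python
def motion_centroid(prev_frame, frame, threshold=30):
    """Return the (x, y) centroid of pixels whose brightness changed by more than
    `threshold` between two grayscale frames (lists of rows), or None if nothing moved."""
    sum_x = sum_y = count = 0
    for y, (prev_row, row) in enumerate(zip(prev_frame, frame)):
        for x, (p, c) in enumerate(zip(prev_row, row)):
            if abs(c - p) > threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count

def gaze_offsets(centroid, width, height, h_fov_deg, v_fov_deg):
    """Linearly map an image point to (pan, tilt) degrees relative to the image center.
    Pan grows toward larger x; tilt is positive upward because image y grows downward."""
    x, y = centroid
    pan = (x / (width - 1) - 0.5) * h_fov_deg
    tilt = (0.5 - y / (height - 1)) * v_fov_deg
    return pan, tilt

# Hypothetical 5x5 frames: two pixels on row 1 brighten between frames.
prev = [[0] * 5 for _ in range(5)]
curr = [row[:] for row in prev]
curr[1][3] = curr[1][4] = 255
centroid = motion_centroid(prev, curr)            # (3.5, 1.0)
print(gaze_offsets(centroid, 5, 5, 60.0, 40.0))   # (22.5, 10.0)
```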


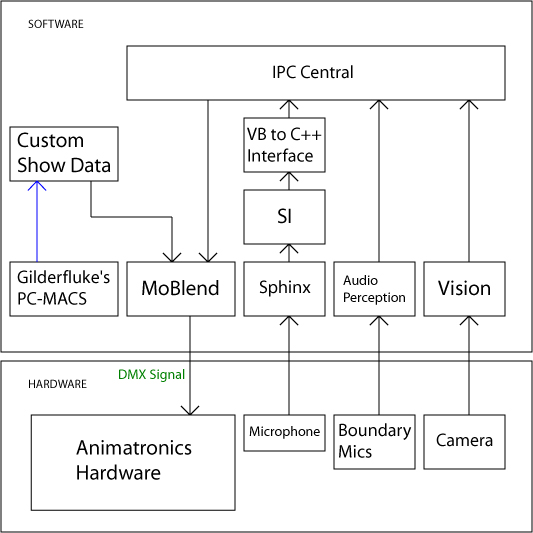

Our motion blending system (MoBlend for short) controls all the motors in the animatronic character. MoBlend is also at the heart of environmental perception, because it intelligently "blends" real-time vision and audio perception data, received through IPC, with the pre-recorded animatronic shows. For example, Doc can respond to motion and sound by turning his eyes and head while he is talking (that is, while a pre-recorded show is playing). The system aims to make the animatronic motion look as natural as possible. For instance, the eyes lead the neck when the head turns, and the head accelerates and then decelerates into position, just as a human's does. These effects are subtle yet crucial in conveying the illusion of life and minimizing the mechanical look of the typical animatronic character.
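MoBlend's internals are not documented here, so the following only sketches, under our own assumptions, the two motion qualities the paragraph describes. A smoothstep easing curve gives zero velocity at the start and end of a move (accelerate, then decelerate), and giving the eyes a shorter move duration than the neck makes them lead the head. The function names, durations, and the single blend weight layered on top of the show value are hypothetical.

```python
def ease_in_out(t):
    """Smoothstep easing: 0 at t=0, 1 at t=1, with zero velocity at both ends."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)

def blend_turn(show_pan, target_pan, elapsed, eye_time=0.2, neck_time=0.6, weight=1.0):
    """Return (eye_gaze, neck_pan) in degrees, `elapsed` seconds after a perception event.

    show_pan:   pan angle the pre-recorded show commands at this moment
    target_pan: direction of the detected sound or motion
    weight:     0.0 ignores perception, 1.0 turns fully toward the target
    The eyes finish in eye_time and the neck in neck_time, so the eyes lead."""
    offset = (target_pan - show_pan) * weight
    eye_gaze = show_pan + offset * ease_in_out(elapsed / eye_time)
    neck_pan = show_pan + offset * ease_in_out(elapsed / neck_time)
    return eye_gaze, neck_pan

for t in (0.0, 0.1, 0.2, 0.4, 0.6):
    print(t, blend_turn(show_pan=0.0, target_pan=30.0, elapsed=t))
```

Both values here are gaze directions; on a physical rig the eye servo angle relative to the head would be the eye gaze minus the neck pan.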

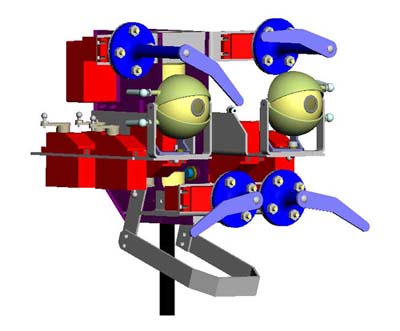

Doc's head was designed and built during the first cycle of the project (November and December 2000). The animatronic design has fourteen degrees of freedom and was completed using SolidWorks mechanical design software. Neck rotate, nod, and tilt functions were added during the second cycle, and upper eyelids during the third. Doc's mechanical design uses Multiplex mc/V2 servo motors, the industry leader in long life and smoothness of motion. The character's animatronics were programmed using Gilderfluke animatronic software.

Animatronic Functions:

Eye Pan (2), Eye Tilt (2), Eyebrow (2), Mustache (2), Eyelids (2)

Jaw, Neck Rotate, Neck Nod, and Neck Tilt

The Interactive Animatronic system that brings Doc to life consists of several separate programs linked by a communication system called InterProcess Communication (IPC), developed by Reid Simmons at Carnegie Mellon's Robotics Institute. Each program acts as a software client that sends information, in the form of messages, to a central IPC server.
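To illustrate the client/central-server pattern described above, here is a toy, single-process message router. It is not the IPC library's API, which we do not reproduce here; the class, method, and message names are hypothetical. In the real system each client is a separate program that communicates with the central server across process boundaries.

```python
from collections import defaultdict

class CentralServer:
    """Toy message router: clients subscribe handlers to named message types,
    and each published message is delivered to every subscriber of that name."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, msg_name, handler):
        """Register a callable to receive every message published under msg_name."""
        self._handlers[msg_name].append(handler)

    def publish(self, msg_name, data):
        """Deliver data to all subscribers; return how many received it."""
        handlers = self._handlers.get(msg_name, [])
        for handler in handlers:
            handler(data)
        return len(handlers)

# Hypothetical wiring: perception clients publish, MoBlend and the discussion engine subscribe.
server = CentralServer()
moblend_inbox = []
engine_inbox = []
server.subscribe("SoundDirection", moblend_inbox.append)
server.subscribe("RecognizedText", engine_inbox.append)
server.publish("SoundDirection", 25.0)
server.publish("RecognizedText", "what is your name")
```

Routing everything through one server means a program such as speech recognition only needs to know message names, not which other programs consume them.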

Research Lessons & Difficulties -

Because our team is working in a new medium that combines numerous technologies, we have learned many lessons and faced many difficulties along the way.

Integrating separate technologies is time-consuming and error-prone. Particular difficulties arise when integrating animatronic control software and hardware with our custom software system, and when designing efficient communication between processes such as speech recognition, MoBlend, and Synthetic Interview.

"Doc" Beardsley provided valuable lessons about how guests are likely to interact with an animatronic character. Because most people are used to seeing these characters only perform, very few expected to be able to simply talk to one. Most audience members must be encouraged to do so and rarely try on their own. Oddly enough, the biggest fear seemed to be the embarrassment of talking to a robot that might not talk back.

Preserving the illusion of life takes hard work. Keeping the character intact and distracting guests from the many technologies involved is an active process. Typical audiences find it hard to overlook the character's robotic nature: questions like "Do you know you're a robot?" and "How many wires do you have inside?" are not the kind of things one would ask an Austrian inventor.

Regarding how audiences typically interact, we found that most guests are hesitant to ask questions in public. The average guest in a group seemed to feel as if they too would be "out on stage" when asking a question. In groups of fewer than about three people, however, questions abounded. We suspect that many guests refrain from asking questions for fear of being embarrassed in return. In response, we have been designing structured experiences to complement the open-ended conversations.

Technology

The Interactive Animatronics Initiative (IAI) is a joint initiative between the Field Robotics Center (FRC) and the Entertainment Technology Center (ETC) at Carnegie Mellon University (CMU).

Carnegie Mellon University, Entertainment Technology Center (c) 2001