Interbots follows the philosophy of technological transparency: allow technology to support the experience, but not become the experience. While much of the work we have done is highly technical, our end goal is a solid and engaging experience. We do not implement technology for technology's sake, and would rather not support a feature or capability if we feel it does not contribute to the character we are trying to create. Guests should forget they are interacting with an autonomous hunk of wires, metal, and plastic. They should see the character in front of them, not the robot. No matter how impressive and complicated the technology becomes, if a believable personality has not been created, an audience will not be fully engaged.

Until now, the vast majority of animatronic research has been done by computer scientists and roboticists. While we very much value the contributions of these disciplines (and indeed have members on our team who specialize in them), we believe that compelling content for such characters should be created by artists, who have the most experience in creating a connection with an audience. Therefore, the development of simple, intuitive content creation tools that allow non-technologists to author content for entertainment robotics is essential.

Our approach is unique primarily because it takes content development out of the hands of computer scientists and places it into the hands of writers, animators, sculptors, painters, and other artists. The beauty of the Interbots Platform is that it provides an interface between artistic vision and technological implementation: it allows designers to explore animatronic expression of personality and emotion without building a single mechanical component. The Interbots Platform design, construction, and authoring process is described below.

Design, Construction, and Authoring Process

Having the liberty to custom-design an animatronic figure for our purposes, we started our development process with character design. The approach was similar to the one taken for film or video-game characters, but with some notable differences. A blue-sky conceptual phase led to three varied concepts, one of which was a robot in form.

Concept art: Kitchit (a lemur), Eibie (a glowworm), and Zineefer (an alien robot).

Iteration led us to move forward with the robot figure after merging the best qualities of all three designs. Pencil sketches established a design style, which was then fleshed out during the creation of a 3D model in Alias Maya, an industry-preferred modeling and animation package.

At this point physical constraints became a notable concern. When designing a virtual character, mass, realistic joint sizes, and other mechanical factors do not need to be considered. For animatronic characters, real-world physical limitations require function to supersede form. We paired a traditional 3D artist with the industrial designer who built the robot in order to ensure that the design was both functional and appealing. A copy of the 3D model was imported into SolidWorks, a mechanical design application. The placement of the internal joints, motors, and armatures was worked out in SolidWorks in parallel with iterations on the cosmetic look of the character in Maya.
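
As a rough illustration of why function must supersede form, the static torque a servo needs to hold a limb horizontal grows with both the limb's mass and the distance of its center of mass from the joint: τ = m·g·r. The Python sketch below checks a hypothetical servo against a hypothetical limb. The function names, the 2× safety factor, and the example masses and ratings are assumptions for illustration, not figures from Quasi's actual design.

```python
G = 9.81  # gravitational acceleration, m/s^2

def holding_torque_nm(mass_kg: float, lever_arm_m: float) -> float:
    """Worst-case static torque (N*m) to hold a limb horizontal: tau = m * g * r."""
    return mass_kg * G * lever_arm_m

def nm_to_kgcm(torque_nm: float) -> float:
    """Convert N*m to the kg*cm figure that hobby-servo datasheets often quote."""
    return torque_nm / G * 100.0

def servo_is_adequate(mass_kg: float, lever_arm_m: float,
                      servo_stall_kgcm: float, safety_factor: float = 2.0) -> bool:
    """Hypothetical check: does the servo's stall torque cover the holding
    torque with the given (assumed) safety margin?"""
    required_kgcm = nm_to_kgcm(holding_torque_nm(mass_kg, lever_arm_m))
    return servo_stall_kgcm >= required_kgcm * safety_factor

if __name__ == "__main__":
    # Hypothetical limb: 0.2 kg, center of mass 10 cm from the joint.
    tau = holding_torque_nm(0.2, 0.1)
    print(f"holding torque: {tau:.4f} N*m ({nm_to_kgcm(tau):.2f} kg*cm)")
    for rating in (3.5, 5.0):
        print(rating, "kg*cm servo adequate:", servo_is_adequate(0.2, 0.1, rating))
```

For this example limb the holding torque is about 0.196 N·m (2 kg·cm), so with a 2× margin a 3.5 kg·cm servo falls short while a 5 kg·cm one suffices. Any change to the cosmetic shell that adds mass or moves it farther from a joint raises this figure directly, which is one reason the shell and armature designs had to evolve together.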

Fabrication began once the external cosmetic shell and internal armature had been finalized in virtual space. Engineering drawings from SolidWorks were used to hand-machine the armature, while the shell pieces modeled in Maya were exported and fabricated on a 3D printer. The pieces from the 3D printer were neither durable nor lightweight enough to use directly on the robot. We solved this problem by making silicone molds of the printed pieces and casting the final parts out of a fiberglass-reinforced resin.

These fiberglass parts can stand up to the challenge of being used on the robot, and have the added benefit of being a one-to-one match of the 3D Maya model. This allows 3D animators to leverage Maya's excellent animation tools (with which they are already familiar) to create lifelike movement for the physical robot. Our Maya plug-in exports the animations to the physical servos so that the animator is not required to have any knowledge of how the machinery works.
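
The source does not describe the plug-in's internals, but the core idea of turning an animator's keyframes into servo commands can be sketched. The Python example below bakes one joint's keyframed rotation into a per-frame list of servo pulse widths. It assumes linear interpolation between keys (a simplification: Maya curves usually use spline tangents), a hobby-servo convention of 1000 to 2000 µs pulses spanning -90° to +90°, and clamping to that travel; all names and calibration values are hypothetical.

```python
from bisect import bisect_right

# Hypothetical servo calibration: pulse width (microseconds) at each end of travel.
PULSE_MIN_US = 1000
PULSE_MAX_US = 2000
ANGLE_MIN_DEG = -90.0
ANGLE_MAX_DEG = 90.0

def sample_curve(keys, frame):
    """Linearly interpolate a joint angle from (frame, angle) keys sorted by frame.
    Frames outside the keyed range hold the first or last keyed value."""
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame)  # frames[i-1] <= frame < frames[i]
    (f0, a0), (f1, a1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)
    return a0 + t * (a1 - a0)

def angle_to_pulse(angle_deg):
    """Map a joint angle to a servo pulse width, clamped to the servo's travel."""
    angle = max(ANGLE_MIN_DEG, min(ANGLE_MAX_DEG, angle_deg))
    span = (angle - ANGLE_MIN_DEG) / (ANGLE_MAX_DEG - ANGLE_MIN_DEG)
    return round(PULSE_MIN_US + span * (PULSE_MAX_US - PULSE_MIN_US))

def export_track(keys, start, end):
    """Bake one joint's animation into pulse widths for frames start..end inclusive."""
    return [angle_to_pulse(sample_curve(keys, f)) for f in range(start, end + 1)]

if __name__ == "__main__":
    # Hypothetical head-turn: center, turn 45 degrees, then swing to -90 degrees.
    head_yaw = [(0, 0.0), (10, 45.0), (20, -90.0)]
    print(export_track(head_yaw, 0, 20))
```

Clamping in `angle_to_pulse` means an animator can never command a servo past its mechanical stops, even if the Maya rig allows a wider rotation; a production exporter would also need to respect the servo's maximum speed.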

After the internal armature was machined and fiberglass pieces of the external shell were fabricated, the character (now named "Quasi") was assembled and attached to the kiosk, which was designed and constructed in parallel with the character. Lastly, Quasi was networked to the kiosk's sensors and controls, and was interfaced to the show control system.

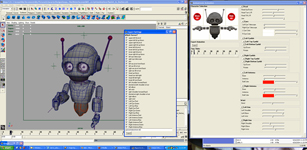

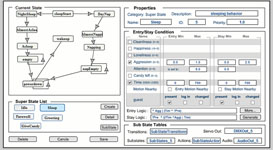

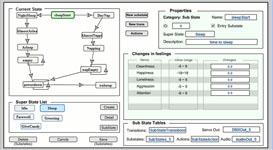

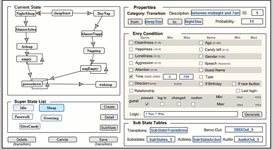

While half of the team was designing and constructing the character and kiosk, the remaining team members' attention was focused on creating a system of authoring tools that allows for rapid development of content. Content is a vital part of any platform and should not necessarily be handled by the same people who designed and created the mechanical components. Realizing this, we leveraged the power of Maya and Director, two applications most artists are very familiar with, to create the primary content authoring tools for animation and interactivity. For the more complex task of programming Quasi's artificial intelligence, we developed an intuitive graphical authoring tool in Flash.

Screenshots: the Maya plug-in (one view) and the Behavior Authoring Tool (three views).

Combined, these tools allow practically anyone to create a Quasi experience in a very short period of time. This was put to the test when Quasi was used in the Building Virtual Worlds course at Carnegie Mellon University. Interdisciplinary teams of four students with no prior experience with the Interbots Platform were able to develop new and original content for Quasi within two-week development periods.