Playtest Week

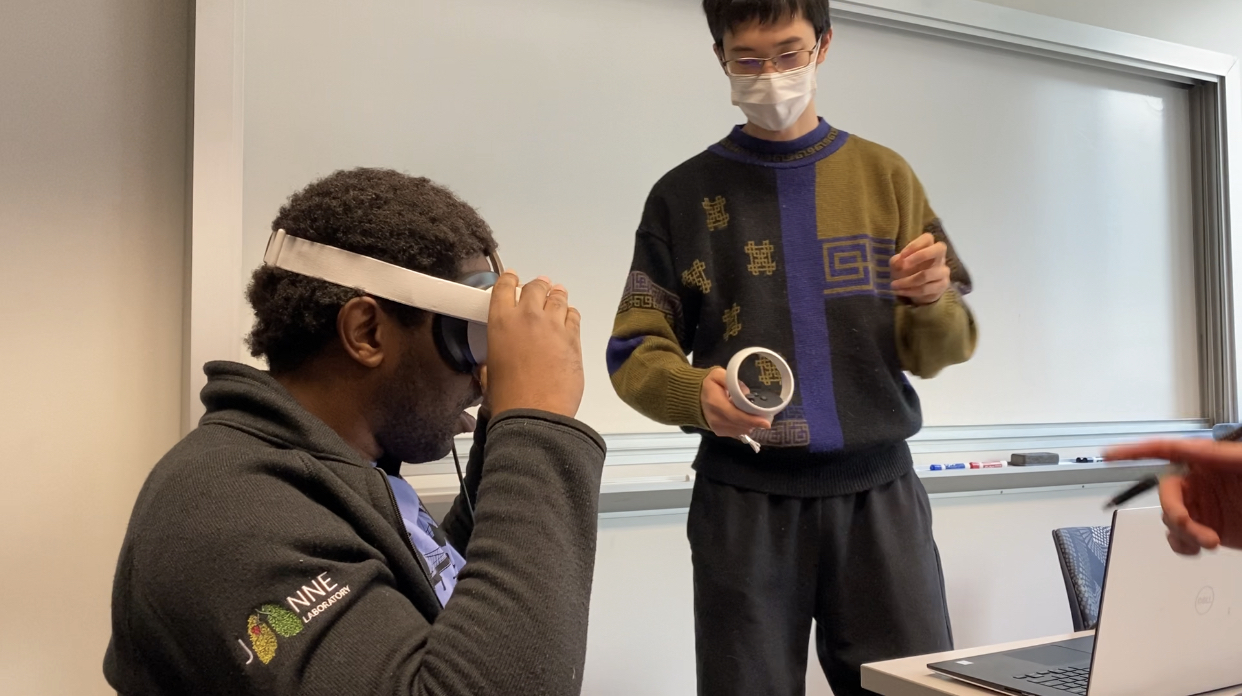

Thanks to the efforts of the faculty and the ETC department, we have a playtest day on a weekend in mid-semester, which hosts dozens of groups of guests from various demographics. The playtest day is both a challenge and a gift: it helps the team better understand the product’s limitations, how intuitive the gameplay is, and how exciting the experience is. Following the schedule, we finished our playable prototype one week before the playtest day. To rehearse, we decided to invite more guests individually to come and play throughout this week. Since our target audience is medical students, we contacted the medical school through our clients and received three responses to the invitation.

The medical students behaved much as we expected. They usually repeat what the patient said and follow up with an appropriate response that addresses the patient’s concern. After the experience, guests generally felt that they understood the scenarios better and that they were actively listening or asking leading questions during the interview. Each response from the medical students was longer than we expected because they are used to (and are taught to) repeat what the patient told them. This standard practice causes a problem for our system, since the time window we set for their response is too short. Our current AI Chatbot automatically splits any sentence longer than five seconds and uses the parts as two separate queries in the conversation tree.
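
To make the splitting problem concrete, here is a minimal sketch, not the project’s actual code. It assumes the speech recognizer returns each word with a start time; `split_utterance`, `demo_words`, and the sample timings are hypothetical names and values chosen for illustration.

```python
from typing import List, Tuple

# (word text, start time in seconds) -- an assumed recognizer output format
Word = Tuple[str, float]


def split_utterance(words: List[Word], max_seconds: float = 5.0) -> List[str]:
    """Cut one utterance into queries that each start within max_seconds."""
    queries: List[str] = []
    current: List[str] = []
    chunk_start = 0.0
    for text, start in words:
        # Close the current chunk once it has run for max_seconds or more.
        if current and start - chunk_start >= max_seconds:
            queries.append(" ".join(current))
            current = []
        if not current:
            chunk_start = start
        current.append(text)
    if current:
        queries.append(" ".join(current))
    return queries


# A student repeats the patient's concern, then asks a follow-up question.
demo_words: List[Word] = [
    ("So", 0.0), ("you", 0.5), ("have", 1.0), ("been", 1.5),
    ("worried", 2.0), ("about", 3.0), ("the", 3.5), ("surgery.", 4.0),
    ("How", 5.5), ("are", 6.0), ("you", 6.3), ("sleeping?", 6.8),
]

print(split_utterance(demo_words, max_seconds=5.0))
print(split_utterance(demo_words, max_seconds=10.0))
```

With the five-second limit, the repeated concern and the follow-up question become two queries. With a longer limit (the 10 seconds here is an arbitrary example value), they stay together as one.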

Therefore, we decided to extend the time limit for the player’s speech. Also, while the patient’s response clips are playing, we will no longer handle interruptions. If the AI Chatbot keeps listening all the time, any unfinished sentence from the previous response or any natural acknowledgment is detected as an interruption, and the system cuts the clip off in the middle, which breaks the flow of the conversation.
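
The "no interruption during playback" rule can be sketched roughly as below. This is an assumption-laden illustration, not our implementation: `TurnGate` and its method names are hypothetical, and a real system would hook them to the video player and speech recognizer callbacks.

```python
from typing import List, Optional


class TurnGate:
    """Drops any speech that arrives while a patient clip is playing."""

    def __init__(self) -> None:
        self.clip_playing = False
        self.ignored: List[str] = []  # kept only for later review of playtests

    def on_clip_started(self) -> None:
        self.clip_playing = True

    def on_clip_finished(self) -> None:
        self.clip_playing = False

    def on_speech(self, transcript: str) -> Optional[str]:
        """Return the transcript as a query, or None if it must be ignored."""
        if self.clip_playing:
            # Trailing words or a natural "mm-hm" no longer cut the clip off.
            self.ignored.append(transcript)
            return None
        return transcript


gate = TurnGate()
gate.on_clip_started()
print(gate.on_speech("mm-hm"))          # ignored while the clip plays
gate.on_clip_finished()
print(gate.on_speech("How are you?"))   # passed on as a query
```

The trade-off of this design is that guests cannot deliberately interrupt the patient, which we accept in exchange for clips that always play to the end.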

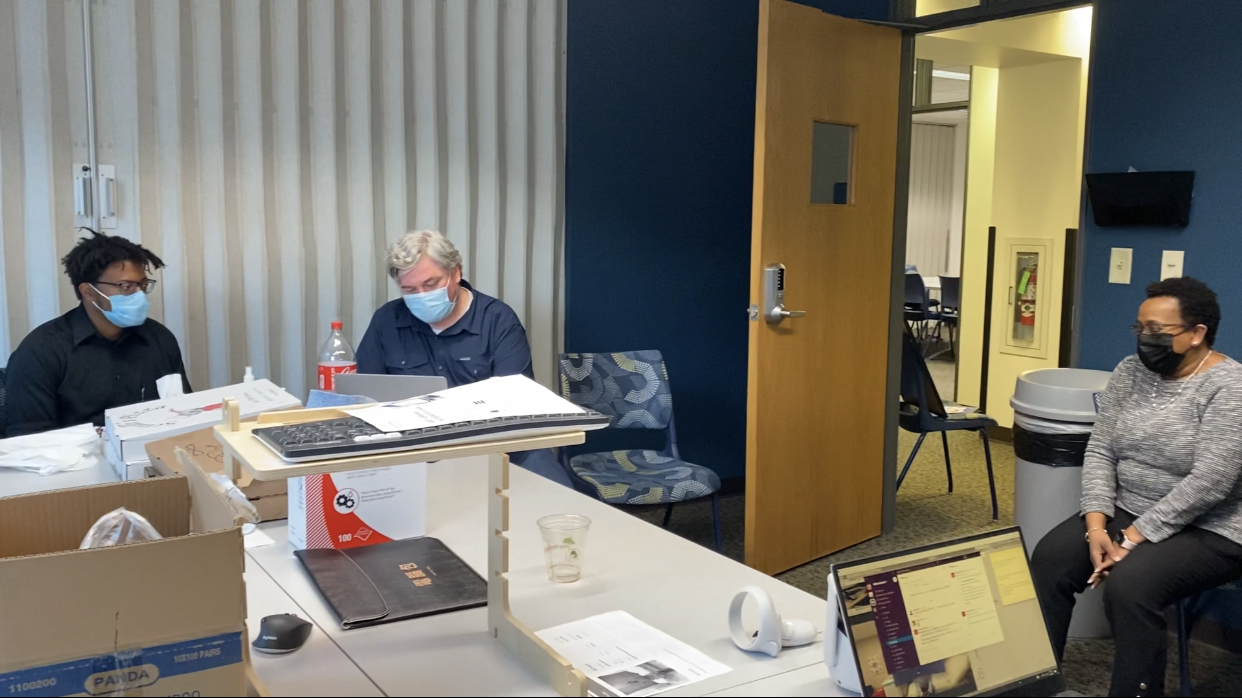

Preparation and Flow Design

Overall, cleaning up the room is necessary for safety and tidiness. We display the poster and cards at the entrance of the project room. Use the whiteboards and working boards to decorate the room and to cover anything you don’t want to show. When the guests arrive, we lead them from the main gate to the room. After a warm welcome and a short introduction to the project, the playtest begins. During the playtest, recording footage and taking notes are essential. After the playthrough, we give the guests a survey/questionnaire, which takes about ten minutes to finish, and accompany it with a short interview in the form of small talk. Survey design is a field of expertise in its own right, so please consult advisors as early as possible!

Guideline before you play

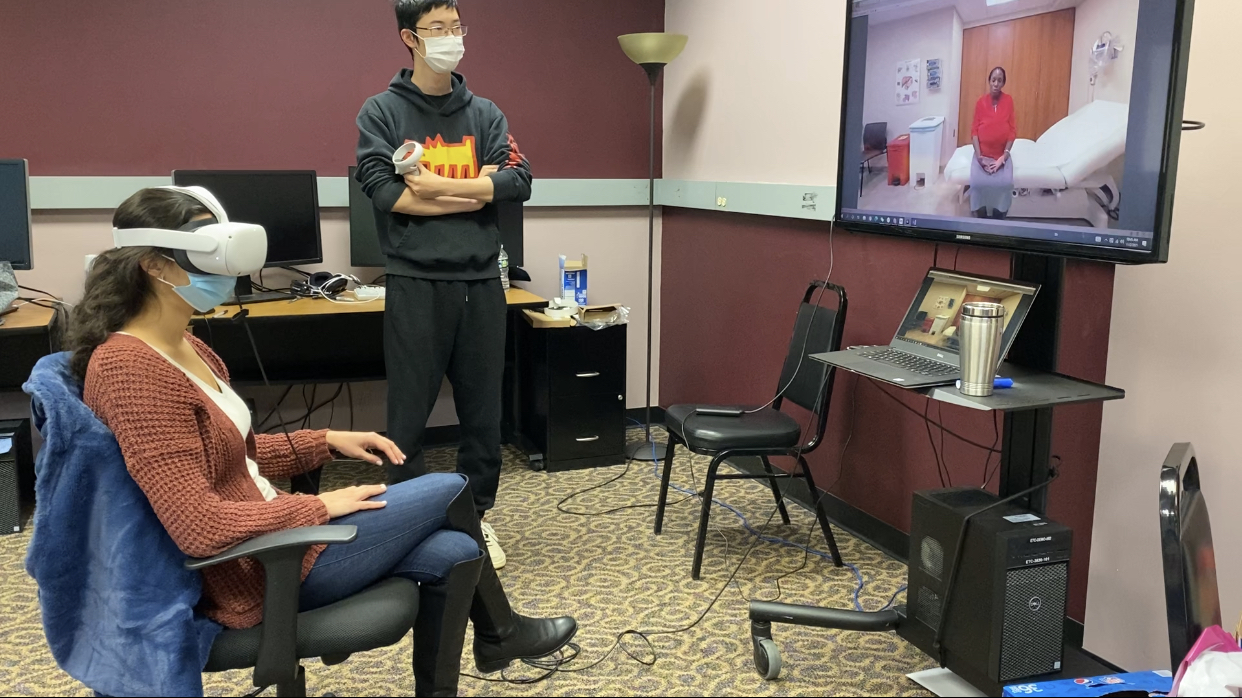

Another gain from the pre-playtest is that we learned to give some guidelines before the training experience. First, we show the patient profile at the beginning, so guests have a basic idea of what they are going to face in the virtual world. Because the VR world is immersive and isolating, setting this expectation helps guests a lot. Second, the guideline for their experience is, “Don’t be a doctor, be a human being.” This is a quote from one of our faculty members. We had a discussion about naive guests (those without healthcare training), who are usually nervous about how to ask questions like a doctor. However, our experience is about empathy, not doctor simulation. The true goal is to care about the patient’s feelings rather than to reach a diagnosis.

Playtest Day!

Generally, we received a lot of positive feedback from guests, which gave us confidence. The cross-fade works well, so people don’t find it jarring when the video clips switch. The shift and the latency range from subtle to noticeable, depending on the guest. Overall, they are not annoying enough to break the immersion. The main problem is understanding. Naive guests sometimes ask something out of scope. Fortunately, we set up a fallback case to catch situations where the AI Chatbot can’t understand the speech at all. Because it’s a patient-led conversation, the experience can usually be finished, but guests sometimes feel that the virtual patient didn’t understand them well at some point. We think this is to be expected, because we use keywords to detect the user’s input and play the pre-recorded clip that matches best. Therefore, when people bring up multiple keywords at once, we cannot guarantee how the AI Chatbot will interpret their response. Usually, the AI Chatbot plays a clip that fits the context almost, but not exactly. Theoretically, with more playtest data and working time, we could manually extend the conversation tree to handle as many potential responses as possible. However, as the complexity of the conversation tree grows, so does the difficulty of maintaining it. We try to strike the balance carefully.
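
The keyword matching and its fallback can be illustrated with a small sketch. It is a simplified stand-in rather than our actual conversation tree: the clip names, keyword sets, and the `pick_clip` function are all hypothetical, and scoring by counting shared keywords is an assumption about how "matches best" could work.

```python
import re
from typing import Dict, Set

# Hypothetical clips and keywords for one node of the conversation tree.
CLIPS: Dict[str, Set[str]] = {
    "pain_clip": {"pain", "hurt", "hurts"},
    "family_clip": {"family", "daughter", "husband"},
    "sleep_clip": {"sleep", "sleeping", "tired"},
}
FALLBACK_CLIP = "did_not_understand_clip"


def pick_clip(utterance: str, clips: Dict[str, Set[str]] = CLIPS) -> str:
    """Return the clip whose keywords overlap most with the utterance."""
    tokens = set(re.findall(r"[a-z']+", utterance.lower()))
    best_clip, best_score = FALLBACK_CLIP, 0
    for clip, keywords in clips.items():
        score = len(tokens & keywords)
        # Strict ">" means that on a tie the clip listed first wins.
        if score > best_score:
            best_clip, best_score = clip, score
    return best_clip


print(pick_clip("Are you in pain? Does it hurt?"))
print(pick_clip("Does the pain keep you from sleeping?"))
print(pick_clip("What is the weather like today?"))
```

The second call shows the ambiguity described above: the guest mentions both pain and sleep, the scores tie, and the result depends only on the order of the clips. The third call contains no keyword at all, so it falls through to the fallback clip.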

We appreciate the opportunity to playtest with so many different people and to gather their perspectives and data for our next iteration.